Introduction

The RedEdge sensor is a multispectral camera that captures five distinct spectral bands to generate precise, quantitative information on the vigor and health of crops. The five bands are Blue, Green, Red, Red Edge, and Near Infrared (NIR). Key specifications of the RedEdge sensor include one capture per second across all bands, a ground resolution of 8.2 cm/pixel at 120 m above ground level (AGL), a weight of 150 grams, and a 5.0 volt power supply. Compared with a standard Red, Green, and Blue (RGB) sensor, the RedEdge sensor provides much more detailed imagery, which makes it useful for gaining a greater understanding of specific agricultural areas of interest.

Methods

The first step was to create a new folder to store the copied RedEdge imagery and any further information or maps created throughout this lab. This folder is used when processing the RedEdge imagery.

The next step is to process the imagery in Pix4D, using the files that were copied into that folder. When creating the new project, make sure to select the “Ag-Multispectral” template (figure 1) rather than the 3D template used in the previous labs. When opening the processing options, select all the necessary options shown in figure 2. Once the correct options are selected, processing can begin. This imagery will take longer to process than the imagery in the previous labs.

Figure 1. Ag Multispectral processing template is selected for value added data analysis

Figure 2. Processing options dialog box

After the imagery is done processing, the next step is to

create an image composite. This is done using a series of geotiffs, one for

each spectral band. First, go into ArcMap or ArcCatalog and create a

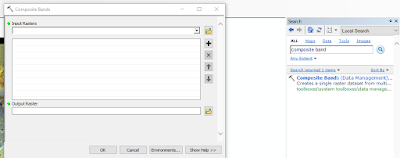

geodatabase within the newly created folder. Next, in the search box, type in

Composite Bands tool (figure 3). With this tool, enter the bands in the correct

order starting with Blue, Green, Red, RedEdge, and NIR. Name and save the

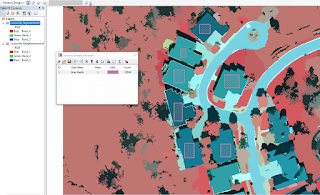

composite to the new geodatabase. Once the composite is created, Arcmap can

display different properties of the image by altering the RGB composite using

the 5 different spectral bands. This is found in the Layer properties dialog

box in the symbology tab with the RGB Composite selected on the left hand side (figure 4).

Figure 3. Composite Bands tool dialog box from the Data Management toolbox

Figure 4. Layer properties dialog box where the Red, Green, and Blue channels can be manipulated with the 5 different RedEdge sensor spectral bands
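
The band assignments described above can be sketched in a few lines of Python. This is only an illustration of how the band order entered into the Composite Bands tool maps to band numbers in the Symbology tab. It is not ArcMap code, and the names `BAND_ORDER`, `band_number`, and `display_combo` are made up for this example.

```python
# Band order entered into the Composite Bands tool (1-based band numbers).
BAND_ORDER = ["Blue", "Green", "Red", "RedEdge", "NIR"]


def band_number(name):
    """Return the 1-based band number of a named band in the composite."""
    return BAND_ORDER.index(name) + 1


def display_combo(red, green, blue):
    """Return the band numbers assigned to the (red, green, blue) channels."""
    return tuple(band_number(name) for name in (red, green, blue))


# Natural color: the Red, Green, and Blue bands on their matching channels.
natural_color = display_combo("Red", "Green", "Blue")

# False color IR: NIR on the red channel, Red on green, Green on blue.
false_color_ir = display_combo("NIR", "Red", "Green")

print("Natural color bands:", natural_color)
print("False color IR bands:", false_color_ir)
```

Because the bands were composited in the order Blue, Green, Red, RedEdge, NIR, the natural color display uses bands 3, 2, and 1, and the false color IR display uses bands 5, 3, and 2.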

The first map is the regular RGB display, with spectral bands 3, 2, and 1 assigned to the red, green, and blue channels (Map 1). The other maps shown below are a Normalized Difference Vegetation Index (NDVI) map, a false color IR map, and a map of surface permeability for the Fall Creek house area of interest. The NDVI map required the Image Analysis window, which is opened from the Windows menu. In the Image Analysis window, select the composite raster and, under Processing, click the NDVI button (figure 5). I then defined display values to show the vegetation health, as shown in Map 2. The false color IR display assigns different spectral bands to the red, green, and blue channels: I used Band 5 for red, Band 3 for green, and Band 2 for blue. With this I created a map showing the false color IR values of the vegetation in this area (Map 3).
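
For reference, the standard NDVI formula is (NIR - Red) / (NIR + Red), which gives values from -1 to 1, with healthy vegetation toward the high end. The sketch below applies that textbook formula to small made-up grids of pixel values. It is not what ArcMap runs internally, the values ArcMap displays may be scaled differently, and the sample numbers and the zero-division handling are assumptions for illustration.

```python
def ndvi(nir, red):
    """Textbook NDVI for one pixel: (NIR - Red) / (NIR + Red)."""
    total = nir + red
    if total == 0:
        # Assumption: report 0.0 for empty pixels instead of dividing by zero.
        return 0.0
    return (nir - red) / total


def ndvi_grid(nir_band, red_band):
    """Apply NDVI pixel by pixel to two equally sized 2D grids."""
    return [
        [ndvi(n, r) for n, r in zip(nir_row, red_row)]
        for nir_row, red_row in zip(nir_band, red_band)
    ]


# Hypothetical values standing in for band 5 (NIR) and band 3 (Red).
nir_band = [[0.50, 0.30], [0.10, 0.0]]
red_band = [[0.10, 0.30], [0.30, 0.0]]

result = ndvi_grid(nir_band, red_band)
for row in result:
    print([round(value, 2) for value in row])
```

In this sample, the pixel with high NIR and low Red gets the highest NDVI, which is the pattern expected for healthy vegetation, while the pixel with more Red than NIR comes out negative.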

Map 4 draws on the skills and knowledge from the previous lab on how to obtain permeable and impermeable surface data. First, spectral bands are extracted to help distinguish urban features from natural features. The tool used here is "Extract Bands", which creates a new image with only three bands. The three bands are the false color IR bands 5, 3, and 2, in that order, so that the vegetation shows up red and the roofs and roads appear dark green to gray. The next step uses the Segment Mean Shift tool (figure 6), which makes the image easier to classify. Once the image is segmented, the Image Classification tool is used to label the differences in vegetation, roads, driveway, and roof (figure 7). After saving the classification, the next tool to use is "Train Support Vector Machine Classifier" (figure 8). This tool is used to produce a raster in which the features are classified the way you want. After the classified raster is completed, the coded values 0 (impermeable) and 1 (permeable) are assigned to distinguish between the impermeable surfaces (house, driveway, roads) and the permeable surfaces (yard and fields).
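
The final recoding step can be sketched as a simple lookup from class to coded value. This is a plain Python illustration, not the ArcMap workflow itself. The class names, the `PERMEABILITY` table, and the tiny sample grid are hypothetical and only mirror the classes named above.

```python
# Coded values from the text: 0 = impermeable, 1 = permeable.
# The class names are hypothetical labels for the training classes.
PERMEABILITY = {
    "vegetation": 1,
    "roads": 0,
    "driveway": 0,
    "roof": 0,
}


def to_permeability(classified):
    """Recode a 2D grid of class labels into 0/1 permeability values."""
    return [[PERMEABILITY[label] for label in row] for row in classified]


# Tiny made-up classified raster, one label per pixel.
classified = [
    ["vegetation", "roof"],
    ["driveway", "vegetation"],
    ["roads", "vegetation"],
]

permeable_grid = to_permeability(classified)
pixel_count = sum(len(row) for row in permeable_grid)
permeable_share = sum(sum(row) for row in permeable_grid) / pixel_count

print(permeable_grid)
print("Permeable share:", permeable_share)
```

A misclassified pixel, such as a car in the driveway labeled as vegetation, would carry straight through this recoding as permeable, so the quality of the final map depends on the classification step before it.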

Figure 5. Image Analysis window with the NDVI leaf button highlighted under "Processing"

Figure 6. Segment Mean Shift tool window for inputting the RGB image.

Figure 7. The training sample manager allows you to group similar classes together such as similar vegetation, roads, driveways, or roofs

Figure 8. Train Support Vector Machine Classifier tool window

Results

The first map shows the regular RGB image, with bands 3, 2, and 1 assigned to the red, green, and blue channels. The second and third maps show a clear pattern: vegetation appears bright green to yellow in the NDVI map and red to pink in the false color IR map. The roads and house, however, appear bright orange to yellow in the NDVI map and dark green to bright blue in the false color IR map. The fourth map shows the difference between the permeable and impermeable surfaces in the area of interest. There were some difficulties in creating the fourth map: the car in the driveway and a small speck of the driveway are shown as permeable, which we know is untrue.

Map 1. RGB image of the Fall Creek area of interest

Map 2. NDVI map of the Fall Creek area of interest

Map 3. False color IR map of the Fall Creek area of interest

Map 4. Permeable vs. impermeable surfaces in the Fall Creek area of interest