Introduction

In Pix4D software, ground control points (GCPs) are used to align the images with the surface of the earth so that the resulting products are spatially and geographically accurate. GCPs are first collected in the field with a GPS system, which ties specific coordinates to points that appear in the images. We use GCPs in Pix4D to enhance the spatial context of the data, allowing the final products to be more geometrically accurate than the raw data collected with the GPS system alone.

Methods

In this lab, the GCPs had already been collected in the field and imported onto the computer so that they could be used with the images from the Litchfield flight logs. Therefore, the section below focuses on tying down the GCPs in Pix4D. Since last week's lab was about getting familiar with Pix4D and how to process images, this week we use GCPs to further enhance the accuracy of the images, process those enhanced images, and produce a more geospatially correct final product.

First, when opening Pix4D, give the project a name that reflects the specified characteristics of the project. Then create a workspace where all of your data will be saved, as shown in Figure 1. On the next page, add the images from the desired flight and make sure the “Shutter Model” is set to Linear Rolling Shutter.

Figure 1. The new project window with the project name and desired workspace.

The next step is to import the GCPs by clicking “Import GCP's…” in the GCP/MTP Manager. When adding GCPs, make sure the latitude and longitude values are assigned correctly: Y corresponds to latitude, and X corresponds to longitude (Figure 2). If latitude and longitude are reversed, the data will be thrown far off and the images will not line up spatially. If the order is correct, the GCPs will appear within your flight area.
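The X/Y order can also be checked before importing. The sketch below is a minimal illustration. It assumes the GCP file is comma-separated with one hypothetical `label,X,Y,Z` row per point, and that an approximate site center in degrees is known, for example from the image geotags. The function names and sample coordinates are invented for illustration and are not part of Pix4D.

```python
import csv
import io
import math

def read_gcps(text):
    """Parse hypothetical 'label,X,Y,Z' rows into (label, x, y, z) tuples."""
    return [(label.strip(), float(x), float(y), float(z))
            for label, x, y, z in csv.reader(io.StringIO(text))]

def looks_swapped(gcps, site_lat, site_lon):
    """Return True if reading X as latitude lands closer to the site.

    X should be longitude and Y latitude. The mean GCP position under each
    interpretation is compared with an approximate site center (degrees).
    """
    mean_x = sum(g[1] for g in gcps) / len(gcps)
    mean_y = sum(g[2] for g in gcps) / len(gcps)
    as_given = math.hypot(mean_y - site_lat, mean_x - site_lon)    # Y=lat, X=lon
    as_swapped = math.hypot(mean_x - site_lat, mean_y - site_lon)  # X=lat, Y=lon
    return as_swapped < as_given

def fix_order(gcps):
    """Swap X and Y so that X holds longitude and Y holds latitude."""
    return [(label, y, x, z) for label, x, y, z in gcps]

# Invented sample where latitude was mistakenly placed in the X column.
sample = "GCP1,45.0010,-91.0020,300.0\nGCP2,45.0030,-91.0010,301.5\n"
gcps = read_gcps(sample)
if looks_swapped(gcps, site_lat=45.0, site_lon=-91.0):
    gcps = fix_order(gcps)
print(gcps[0])  # ('GCP1', -91.002, 45.001, 300.0)
```

This heuristic only works when the site's latitude and longitude values are clearly different numbers, which is true for most locations. Seeing the GCPs land inside the flight area after import remains the final check.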

After all the information is correct and the GCPs are imported, run initial processing, because tying the GCPs to the imagery is much easier and more efficient in the rayCloud than doing it manually. Make sure step 1 is checked and steps 2 and 3 are unchecked, then press Start to run initial processing (Figure 3).

Once initial processing is finished, open the rayCloud editor and click on a GCP to bring up the images in which that GCP is located. Find the GCP within an image and click on its center to tie the image down to the GCP (Figure 4). Mark each GCP in 2 to 3 images. Once the GCPs are tied down, click “Automatic Marking” and then “Apply.” Next, go to “Process” and select “Rematch and Optimize.”
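The guideline of marking each GCP in 2 to 3 images can be checked with a short sketch before running Rematch and Optimize. This is a hypothetical illustration only. The `marks` dictionary and image names stand in for whatever record you keep of the images each GCP was clicked in; the code does not read anything from Pix4D or the rayCloud.

```python
def under_marked(marks, minimum=2):
    """Return, sorted, the GCP labels marked in fewer than `minimum` distinct images."""
    return sorted(label for label, images in marks.items()
                  if len(set(images)) < minimum)

# Hypothetical record of which images each GCP was marked in.
marks = {
    "GCP1": ["IMG_0101.JPG", "IMG_0102.JPG", "IMG_0110.JPG"],
    "GCP2": ["IMG_0140.JPG"],
    "GCP3": ["IMG_0175.JPG", "IMG_0176.JPG"],
}
print(under_marked(marks))  # ['GCP2']
```

Counting distinct image names means that clicking the same image twice does not count as two marks.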

Figure 3. Processing options with step 1 checked and steps 2 and 3 unchecked.

Figure 4. Images being tied down to the GCPs in the rayCloud.

Since there are two sets of data that need to be combined, select the merge project option in the first window instead of creating a new project. This allows each dataset to be processed faster. After the projects have been merged, the finished product can be brought into ArcMap to make maps.

Results

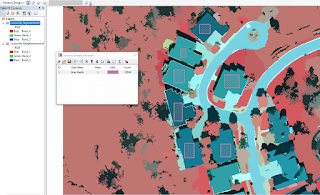

The new DSM can now be brought into ArcMap to view all of the data and create various maps. The map in Figure 5 is an elevation map of Litchfield that also shows all of the ground control points (GCPs). Because these GCPs have been added, this map is more accurate than the map created in the first Pix4D lab.
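One common way to put a number on accuracy is the root mean square (RMS) error of the GCP residuals. A residual is the difference between a GCP's surveyed coordinates and its position in the reconstructed model. The Pix4D quality report includes a per-GCP error table of this kind. The sketch below computes RMS per axis from residuals in meters. The residual values are invented placeholders, not results from this lab.

```python
import math

def rms(values):
    """Root mean square of a list of residuals: sqrt(mean(v^2))."""
    return math.sqrt(sum(v * v for v in values) / len(values))

# Hypothetical per-GCP residuals in meters (surveyed minus model): (dx, dy, dz).
residuals = [(0.03, -0.04, 0.05), (-0.02, 0.01, -0.06), (0.01, 0.03, 0.02)]

for axis, name in enumerate("XYZ"):
    print(name, round(rms([r[axis] for r in residuals]), 3))
```

Lower RMS values mean the model agrees more closely with the surveyed GCP positions.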

Figure 5. Map created from Pix4D Litchfield Flights 1 and 2 data.

Conclusion

Collecting GCPs and using them as tie-down points for the imagery can greatly increase the accuracy of your maps. This is a great way to ensure accuracy with UAS platforms and to collect more accurate data, such as volumes, within the Pix4D software. Merging two projects is also a good way to create a larger connected map of the same location.