Introduction

This lab calculates stockpile volumes in both Pix4D and ArcMap using the Litchfield data from a previous lab. Three methods are used, each with different software or tools: Pix4D, ArcMap with a raster clip, and ArcMap with a TIN. This is a quick and efficient way of calculating the leftover stockpiles in mines, quarries, or other related applications. Using a UAS is also accurate, detailed, cost effective, and time efficient for anyone who needs to obtain stockpile volumes.

Operations Utilized

Raster Clip - Clip is a data management tool that cuts a portion of a raster dataset out of the original dataset.

Raster to TIN - Raster to TIN is a 3D analyst tool that converts a raster into a triangulated irregular network (TIN) dataset.

Add Surface Information - Add Surface Information is another 3D analyst tool that adds attributes to features with spatial information derived from a surface.

Surface Volume - Surface Volume is a 3D analyst tool that calculates the area and volume of a region between a surface and a reference plane.

Polygon Volume - Polygon Volume is a 3D analyst tool that calculates the volume and surface area between a polygon and terrain or TIN surface.

Cut Fill - Cut fill is a 3D analyst tool that calculates the volume change between two surfaces. This tool is typically used for cut and fill operations.

Methods

Pix4D stockpile volume calculations

Start the lab by creating a new folder for the Litchfield data and copying the data into it. This takes a fair amount of time because of the large amount of data being copied. After the Litchfield project is opened in Pix4D, go to the volumes tab on the left-hand side of the project. Next, select the "add volume" button to add the three stockpiles. Then digitize around the base of each stockpile, as shown in figure 1. After the digitizing is done, select calculate to get the volume of each stockpile, which is also shown in figure 1. The stockpile to the far right has an estimated volume of 978.60 cubic meters, the middle pile is 527.37 cubic meters, and the smallest pile up top is 16.13 cubic meters.

Figure 1. Pix4D area of all 3 stockpiles used for volumetric analysis
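As a quick check on the Pix4D numbers reported above, the three volumes can be totaled and each pile expressed as a share of the whole. This is only a small arithmetic sketch; the dictionary keys are informal labels chosen here, not names used in Pix4D.

```python
# Pix4D volumes reported in this lab, in cubic meters.
# The keys are informal labels for illustration only.
pix4d_volumes_m3 = {
    "far right pile": 978.60,
    "middle pile": 527.37,
    "top pile": 16.13,
}

total_m3 = sum(pix4d_volumes_m3.values())

for name, volume in pix4d_volumes_m3.items():
    share = volume / total_m3
    print(f"{name}: {volume:.2f} m^3 ({share:.1%} of total)")

print(f"total: {total_m3:.2f} m^3")
```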

ArcMap raster clip stockpile volume calculations

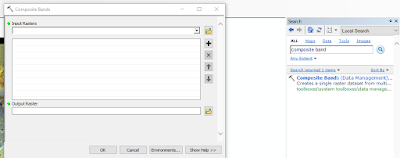

The first part of this method is to open the mosaic from Pix4D and create a new geodatabase with one feature class for each stockpile. The next step is to digitize the three piles and clip each one out of the raster using the Extract By Mask tool with the correct parameters for each pile, as shown in figure 2.

Figure 2. Extract by mask tool with the parameters for stockpile 1

Next, use the Surface Volume tool to calculate the area and volume of each stockpile with the correct parameters, as shown in figure 3. To find a reference plane, use the identify tool to read the surface elevation at each stockpile, given by the pixel value field. After the tool is run, an attribute table is created with values of plane height, area, and volume, as shown in figure 4. Figure 5 shows the model builder data flow for stockpile 1. The same model can be used for the other two stockpiles as well.

Figure 3. Surface volume tool inputs

Figure 4. Attribute table after the surface volume tool is run

Figure 5. Data flow model of stockpile one using raster clip
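To make the Surface Volume step more concrete, here is a minimal sketch of the idea behind it: every raster cell higher than the reference plane contributes its height above the plane times the cell area. This is a simplified cell-column approximation written for illustration, not ArcMap's actual implementation. The function name `surface_volume_above`, its parameters, and the sample grid are all assumptions.

```python
def surface_volume_above(dem, cell_size, plane_height, nodata=None):
    """Approximate 2D area and volume of a raster surface above a plane.

    dem          -- rows of elevation values (list of lists)
    cell_size    -- cell width/height in ground units (square cells assumed)
    plane_height -- elevation of the horizontal reference plane
    nodata       -- value marking cells outside the clipped stockpile
    """
    cell_area = cell_size * cell_size
    area = 0.0
    volume = 0.0
    for row in dem:
        for z in row:
            if z is None or z == nodata:
                continue  # skip cells outside the clip
            height = z - plane_height
            if height > 0:
                # Only cells above the plane count toward area and volume.
                area += cell_area
                volume += height * cell_area
    return area, volume


# Hypothetical 2 x 2 clip with 0.5 m cells and a plane at 100 m.
clip = [[100.0, 101.0],
        [102.0, 99.0]]
area, volume = surface_volume_above(clip, cell_size=0.5, plane_height=100.0)
print(area, volume)  # 0.5 0.75
```

The plane height plays the same role as the elevation read with the identify tool: choosing a higher plane leaves less of the pile above it and lowers the volume.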

ArcMap TIN stockpile volume calculations

To find the volumes with a TIN, first convert the three raster clips into TINs. This is done using the Raster to TIN tool, as shown in figure 6. Now that there is a TIN for each of the three stockpiles, the next tool to use is the Add Surface Information tool with the correct parameters in place (figure 7). The last tool to use is the Polygon Volume tool, which calculates the volume and surface area of the TIN (figure 8). Figure 9 displays the data flow model for the TIN volume calculations.

Figure 6. Raster to TIN tool inputs

Figure 7. Add surface information tool inputs

Figure 8. Polygon Volume tool inputs

Figure 9. Data flow model of the TIN for stockpile
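The TIN approach can be sketched in a similar way. Each TIN triangle is a flat facet, so the volume between it and a horizontal plane is its plan (2D) area times the mean height of its three vertices above the plane. The function below is an illustrative sketch only, not the Polygon Volume tool itself; the name `tin_volume_above` and the sample triangles are hypothetical, and it assumes every vertex sits at or above the reference plane.

```python
def tin_volume_above(triangles, plane_height):
    """Volume between TIN triangles and a horizontal reference plane.

    triangles    -- iterable of triangles, each three (x, y, z) vertices
    plane_height -- elevation of the reference plane

    Assumes all vertices are at or above the plane; triangles that cross
    the plane would need to be split first.
    """
    total = 0.0
    for (x1, y1, z1), (x2, y2, z2), (x3, y3, z3) in triangles:
        # Plan (2D) area of the triangle from the cross product.
        plan_area = abs((x2 - x1) * (y3 - y1) - (x3 - x1) * (y2 - y1)) / 2.0
        # A planar facet's prism volume is plan area times mean height.
        mean_height = (z1 + z2 + z3) / 3.0 - plane_height
        total += plan_area * mean_height
    return total


# Hypothetical two-triangle TIN covering a 2 m x 2 m square.
tin = [
    ((0.0, 0.0, 10.0), (2.0, 0.0, 10.0), (0.0, 2.0, 13.0)),
    ((2.0, 0.0, 10.0), (2.0, 2.0, 10.0), (0.0, 2.0, 13.0)),
]
print(tin_volume_above(tin, plane_height=10.0))  # 4.0
```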

Results

The table below displays the volumes calculated with the three methods: Pix4D, ArcMap raster, and ArcMap TIN.

Table 1. Results table for volumetric analysis

Map of the Litchfield mine site with the stockpiles used for volumetric analysis

Discussion

The results show that Pix4D produced lower volumes than both the ArcMap raster and TIN calculations. The Pix4D method was simple and to the point: digitize the base of each stockpile and the program calculates the rest. The ArcMap raster and TIN calculations, however, required multiple tools to be used correctly with the correct parameters entered.